Cloud Native and Kubernetes Security Predictions 2024

By Andrew Martin

- Global conflict and economic tightening pressure security departments

- AI supply chain uncovered as ticking time bomb

- Quantum computers finally challenge elliptic curve cryptography

- Mutating AI threat landscape brings new horrors to traditional security concerns

- Novel identity and access mechanisms overtake legacy IAM approaches

- Common Cloud Controls battles misconfiguration, the greatest source of cloud insecurity

- “Open Source” AI fails in deterministic reproducibility at scale

- Hybrid and multi-cloud migration precipitates little change

- Supply chain vulnerabilities intensify as vendors sign SBOMs and VEX documents

- Rust ensures longevity with user-facing code in Linux kernel

- WebAssembly’s component model revolutionises language interoperability

- Confidential Computing enhances private compute on untrusted hardware

- CISOs and employers increasingly penalised and cybersecurity insurance descopes ransomware and negligence

- Open Source regulations and licensing continue to confound

Global conflict and economic tightening pressure security departments

Last year was characterized by the greatest escalation in global conflict since 1945, and geopolitical sabre-rattling indicates that this will only intensify in 2024. With forty percent of the world’s democracies anticipated to hold elections, heightened tensions may increase the velocity of attacks against critical national infrastructure, in conflict zones and beyond.

Image Source: Stability.ai

Nation-state actors continue to optimise guerrilla cyberwarfare where physical strength is imbalanced, using attacks on critical systems as part of asymmetric warfare. Ethical hacking in conflict zones, by both combatants and civilians, now has rules of engagement. Calls to establish a Digital Geneva Convention will undoubtedly develop, building on international humanitarian law (IHL), which already provides rules that all hackers operating in armed conflicts must follow, both to protect civilians and to limit the proliferation of warfare. These rules include not targeting civilian objects, avoiding indiscriminate damage, minimizing collateral harm to civilians, not attacking medical and humanitarian facilities, and not making threats of violence or inciting violations of IHL.

Economic tightening, from physical supply chain issues to challenges to global shipping safety, is driving reduction-in-force edicts at publicly listed organisations, which in turn constrain budget allocation for already overstretched security teams.

AI supply chain uncovered as ticking time bomb

AI supply chains present an expansive attack surface that spans the entire AI lifecycle: from development artefacts, through model tampering and poisoning (including undetectable Sleeper Agents), to the safety of the runtime environment, which faces prompt jailbreaking and injection, availability and integrity violations, and model inversion. Together these necessitate firewalling AI systems from user input.

As industry and academia race to realize the promises of generative AI for profit and research, these unique adversarial techniques may pose a significant threat to the operational integrity of systems relying on generative AI. Security leaders must account for model monitoring, anomaly detection, data protection, data management, and AI-specific application hardening as essential components of their security strategies.

Image Source: DALL-E 3 and Clipdrop

The PyTorch dependency confusion attack at the end of 2022 is a low-impact example of these security considerations: had dependent projects automatically built and deployed the malicious package, deployments of innumerable AI projects and their data would have been exposed, as the attacker gathered authentication information and the contents of users’ home directories. The trend of pushing containers and packages from laptops and under-desk towers will not abate while the packaging and distribution of core components is not universally well secured.
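One common form of dependency confusion can be sketched as follows. The resolver below is a simplified, hypothetical model of installers that, like pip given an `--extra-index-url`, pool candidates from every configured index without preferring one and install the highest version found. The index names, package name, and versions are invented for illustration; real resolvers also consider wheel compatibility, yanked releases, and hash pinning.

```python
from typing import Dict, List, Tuple

Index = Dict[str, List[str]]  # package name -> published versions


def parse_version(text: str) -> Tuple[int, ...]:
    """Parse a purely numeric dotted version such as '1.3.0' (simplified: no pre-releases)."""
    return tuple(int(part) for part in text.split("."))


def resolve(name: str, indexes: Dict[str, Index]) -> Tuple[str, str]:
    """Return (index, version) for the highest version of `name` on ANY index.

    No index is preferred over another, which is what lets a public upload win.
    """
    candidates = [
        (parse_version(version), index_name, version)
        for index_name, packages in indexes.items()
        for version in packages.get(name, [])
    ]
    if not candidates:
        raise LookupError(f"{name} not found on any index")
    _, index_name, version = max(candidates)
    return index_name, version


def confusable(name: str, indexes: Dict[str, Index], private_index: str) -> bool:
    """Flag an internal package name that also exists on some other index."""
    return any(
        name in packages
        for index_name, packages in indexes.items()
        if index_name != private_index
    )


# Hypothetical index contents: an internal package and an attacker's upload of the same name.
INDEXES: Dict[str, Index] = {
    "private": {"internal-utils": ["1.2.0", "1.3.0"]},
    "public": {"internal-utils": ["99.0.0"]},
}

print(resolve("internal-utils", INDEXES))  # ('public', '99.0.0')
print(confusable("internal-utils", INDEXES, private_index="private"))  # True
```

Pinning hashes, or serving every dependency through a single curated index, removes the ambiguity that `resolve` exploits here.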

The opaque binary firmware blobs distributed by GPU vendors prevent effective scrutiny of their security (as with CPU microcode), so it remains difficult to determine whether AI workloads on compromised networks have been tampered with. NVIDIA is investing more in confidential computing for GPUs, enabling properties similar to those that confidential VMs (CVMs) and enclaves provide on CPUs, and data transfer from CPU to GPU can be encrypted. This doesn’t remove the need to trust the GPU and its firmware, but it will improve trust when using GPUs in shared environments.

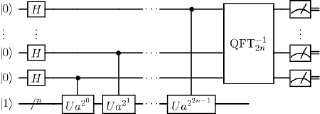

Quantum computers finally challenge elliptic curve cryptography

December 2023 yielded a quantum breakthrough, as a team from DARPA and Harvard University achieved an advance in error correction for quantum circuits. This stability enhancement is the start of a journey towards running useful algorithms on the platform, paving the way for larger quantum computers capable of solving the Elliptic Curve Discrete Logarithm Problem (ECDLP), which underpins elliptic-curve cryptography. That would break elliptic-curve Diffie-Hellman key exchanges (used at the start of a modern TLS handshake, and by SSH, messaging apps, and PGP), while the closely related integer factorisation problem leaves RSA equally exposed.

The prospect of running Shor’s algorithm, which solves both problems efficiently, brings this into stark relief and explains the internet-scale quantum-resistant cryptography experiments undertaken by major players like Google (introducing hybrid ECC+Kyber key exchange in Chrome) and Cloudflare (securing huge swathes of its backend connections and forking BoringSSL to add hybrid post-quantum key exchange). With NIST’s programme to standardise post-quantum algorithms ongoing, the incremental migration between algorithms will accelerate.
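To show where the quantum speed-up sits, here is a sketch of the classical reduction at the heart of Shor’s algorithm: factoring N reduces to finding the order r of a base a modulo N. In this toy version a brute-force loop stands in for the quantum period-finding step, so it runs in exponential time on classical hardware and is only practical for tiny numbers such as 15 and 21.

```python
from math import gcd
from typing import Optional, Tuple


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).

    This brute-force loop stands in for quantum period finding, the only
    step of Shor's algorithm that needs a quantum computer.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_classical(n: int, a: int) -> Optional[Tuple[int, int]]:
    """Classical post-processing of Shor's algorithm for a chosen base `a`.

    Returns a factor pair, or None if this base is unlucky (odd order, or
    a**(r/2) == -1 mod n), in which case another base would be tried.
    """
    common = gcd(a, n)
    if common != 1:
        return common, n // common  # the base already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None
    p = gcd(half - 1, n)
    return p, n // p


print(shor_classical(15, 7))  # (3, 5): the order of 7 mod 15 is 4, and gcd(7**2 - 1, 15) = 3
```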

New cryptography will require extensive vetting for unforeseen weaknesses. The Cryptographic Suite for Algebraic Lattices (CRYSTALS) includes CRYSTALS-Kyber (key encapsulation) and CRYSTALS-Dilithium (signatures), both of which rely on lattice-based cryptography for quantum resistance, while the signature schemes FALCON (also lattice-based) and SPHINCS+ (hash-based) offer distinct advantages in signature size and approach. Existing symmetric algorithms can increase their attack tolerance by increasing key length.
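As a worked illustration of the key-length point (the standard argument, not specific to any algorithm named above): Grover’s algorithm searches an unstructured space of 2^n keys in on the order of 2^(n/2) quantum queries, so it roughly halves a symmetric key’s effective security in bits, and doubling the key length restores the original margin.

```latex
\begin{aligned}
N &= 2^{n}, \qquad \text{Grover queries} \approx \tfrac{\pi}{4}\sqrt{N} = \tfrac{\pi}{4}\,2^{n/2} \\
\text{AES-128: } n = 128 &\;\Rightarrow\; \approx 2^{64} \text{ queries (about 64-bit security)} \\
\text{AES-256: } n = 256 &\;\Rightarrow\; \approx 2^{128} \text{ queries (about 128-bit security)}
\end{aligned}
```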

Kubernetes is already capable of supporting quantum workloads with Qiskit (which parallelises and recombines quantum compute workloads), and as Quantum Key Distribution in Kubernetes Clusters integrates the Quantum Software Stack (QSS), cryptographic keys can be exchanged within software management stacks in a manner resistant to quantum computation.

The Kubernetes control plane’s migration to post-quantum key exchange will be enabled by Go’s crypto library implementing X25519Kyber768, sometime after version 1.24.

This urgency for post-quantum cryptography is propelled by the need to safeguard sensitive communications from the coming quantum era. Encrypted data transmitted over internet backbones today is undoubtedly being recorded en masse and stored, awaiting the moment its ciphers can be broken in a reasonable amount of time.
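This harvest-now, decrypt-later risk is often framed with Michele Mosca’s inequality: if the number of years data must stay confidential (x) plus the years a migration to post-quantum cryptography will take (y) exceeds the years until a cryptographically relevant quantum computer exists (z), then traffic recorded today is already at risk. A minimal sketch, with purely hypothetical inputs:

```python
def mosca_at_risk(shelf_life_years: float, migration_years: float, years_to_crqc: float) -> bool:
    """Mosca's inequality: data is exposed if x + y > z.

    x = how long the data must remain confidential (shelf life)
    y = how long migrating to post-quantum cryptography will take
    z = years until a cryptographically relevant quantum computer (CRQC) exists
    """
    return shelf_life_years + migration_years > years_to_crqc


# Hypothetical inputs: records that must stay secret for 10 years, a 5-year migration,
# and an assumed 12 years until a CRQC.
print(mosca_at_risk(10, 5, 12))  # True: traffic recorded today could be decrypted in time
print(mosca_at_risk(1, 2, 12))   # False
```

The value of the framing is that it makes the migration time, which an organisation controls, an explicit term in the risk calculation.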

Signal will continue to lead the way in quantum-resistant instant messaging, having anticipated the need for privacy from future quantum machines by running Diffie-Hellman and a quantum-resistant algorithm in parallel with PQXDH (an upgrade to the X3DH specification that combines the X25519 and CRYSTALS-Kyber algorithms to compute a shared secret).
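The hybrid idea behind PQXDH can be sketched as feeding both shared secrets into one key derivation function, so an attacker must break both X25519 and Kyber to recover the session key. This is a simplification, not Signal’s actual PQXDH KDF (which defines its own input encoding and info string): it implements HKDF-SHA256 from RFC 5869 with the standard library, and uses random bytes as placeholders for the X25519 and Kyber outputs, since neither primitive is in Python’s standard library. The `info` label is hypothetical.

```python
import hashlib
import hmac
import os


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) with SHA-256: extract a pseudorandom key, then expand it."""
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()  # extract step
    okm, block, counter = b"", b"", 1
    while len(okm) < length:  # expand step: T(i) = HMAC(PRK, T(i-1) || info || i)
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def hybrid_session_key(classical_ss: bytes, pq_ss: bytes) -> bytes:
    """Derive one key from BOTH secrets; recovering it requires breaking both schemes."""
    return hkdf_sha256(
        ikm=classical_ss + pq_ss,
        salt=bytes(32),                    # zero-filled salt
        info=b"hypothetical-hybrid-demo",  # hypothetical context label
    )


# Placeholders: in a real protocol these come from X25519 and a Kyber decapsulation.
x25519_ss = os.urandom(32)
kyber_ss = os.urandom(32)
key = hybrid_session_key(x25519_ss, kyber_ss)
print(len(key))  # 32
```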

The adoption and integration of new ciphers will be critical for maintaining the confidentiality and integrity of our digital information. But it’s not imminent in 2024: error rates and the numbers of physical and logical qubits are rarely advertised, yet they have a significant impact on practical feasibility. The latest quantum model from IBM has around 10^3 qubits, while current estimates require around 10^7 physical qubits to break ECC within 24 hours, a gap of roughly four orders of magnitude.

Mutating AI threat landscape brings new horrors to traditional security concerns

Generative AI is no less at risk from adversarial AI techniques than its methodological predecessors, and presents additional exploitable attack surfaces. 2023 saw generative AI used for various nefarious social engineering purposes, including virtual kidnapping (cloning a target’s voice from recordings and playing it to their family), deepfakes (confusing and confounding voters and others unaware of a video’s provenance), phishing (highly personalised Business Email Compromise), and synthetic identity fraud (generating a new identity from collected metadata using AI).

Image Source: DALL-E 3 and Clipdrop

Identity verification suppliers that link a government-issued ID to an individual will be outpaced by synthetic images, as AIs are able to accurately spoof the control image on file, and CAPTCHAs may be considered effectively broken, or remain outsourceable to remote, human-powered call centres.

Military AI underwent some human-centric hardening, as the 2023 Responsible AI in the Military Domain (REAIM) summit agreed to keep humans in the loop for decision-making in any AI-powered weapon. This is unlikely to be universally accepted, especially amongst insurgent or resistance movements, and the fervent integration of autonomous weaponry into the Ukraine conflict will spill out into weapons programs globally. Dumb munitions will gain independent target acquisition and chase capabilities similar to the close-range loitering munitions deployed in 2023.

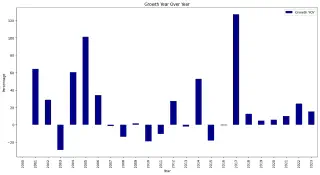

AI may be harnessed for good too. Previous DARPA challenges, which tasked automated systems with exploiting machine code and patching binaries, have set the scene for AI’s expected future capabilities, as automated attack systems probe internet-facing systems for weaknesses and the yearly CVE count keeps rising (although fewer “criticals” emerged this year). DARPA’s new two-year AI Cyber Challenge continues this work to develop new cybersecurity tooling, and the trend of incorporating explainable AI to rationalise results and mitigate nondeterminism will make its way into legislation.

Application and infrastructure AI security monitoring will continue to grow rapidly, with a significant focus on AI-driven risk assessments and integrations with DevSecOps workflows. End users will use AI to escape the purgatory of vulnerability management and lower their mean time to remediation for wide-scale CVEs and exposures by sharing supply chain security information with vendors in the same way Palo Alto firewalls have been doing for networks.

Source: JerryGamblin.com: CVE Growth

AI-customised and obfuscated payloads will increasingly challenge binary analysis libraries and systems, as the fingerprints and detections we are used to in EDR become subject to novel evasion techniques. This will herald a new era in which traditional binary analysis is much harder for humans, but detecting malicious scripts, tooling, and vulnerability exploitation becomes an easier challenge for AI to solve.

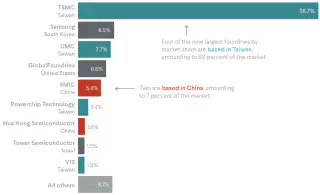

Developing these new AI capabilities, and training generative adversarial networks (GANs) to respond to them, will be a new arms race, one limited by the nanometer lithography of GPU chips. Export controls on US chips to China, the protection of Taiwanese chipmaker TSMC from Chinese repatriation, and potential fallout from changing long-standing senior management at ASML (the company that builds fabrication machinery for TSMC) highlight the burgeoning instability in AI advancement’s most crucial sector.

Source: CFR research

Rumours that Sam Altman is looking to rival NVIDIA by establishing a chip firm with Cerebras Systems bring this silicon race into sharp focus. Cerebras’ Wafer Scale Engine is an 850,000-core, 7nm chip the size of a dinner plate, packing 2.6 trillion transistors and powering a 4 exaFLOP supercomputer that promises 10x AI training speedups. Current GPUs hold around 60 billion transistors, roughly 2.5 trillion fewer: hardware will win the next phase of the AI war.

Novel identity and access mechanisms overtake legacy IAM approaches

Identity and Access Management (IAM) is in need of transformation, as the failings of traditional mechanisms like passwords, tokens, 2FA/MFA, and password managers are scrutinized in light of newer advancements. Governing bodies may turn their eye to these failings after 2023’s attacks on identity providers (IdPs), including Okta (compromised through a personal account used on a company laptop), were used to gain access to further systems.

Image Source: Stability.ai

Passkeys offer a mechanism to replace traditional challenge-based MFA altogether, assuming the user already has the second factor by virtue of using the passkey from a mobile device (itself assumed to be protected by strong authentication such as a screen lock code or biometrics). They are unique public-key credentials, scoped to a specific website (and, for device-bound passkeys, a specific device), that defend against phishing by eliminating shared password strings. This is akin to placing OTP codes into a password manager: the assumption is that any remotely held authentication is superior to a system holding a hashed user/password pair that may be broken by today’s or tomorrow’s brute-force cracking (Hashcat’s crown is under threat from AI tools like PassGAN).
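Part of why passkeys resist phishing can be shown in code. In WebAuthn, the browser (not the user) writes the requesting page’s origin into `clientDataJSON`, and the relying party checks it along with the challenge it issued. The sketch below covers only those first verification steps; it deliberately omits checking the authenticator data and the public-key signature, which a real server must also do (normally via a maintained WebAuthn library). The domains are placeholders.

```python
import base64
import json
import secrets


def b64url(data: bytes) -> str:
    """Unpadded base64url, the encoding WebAuthn uses for the challenge in clientDataJSON."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def check_client_data(client_data_json: bytes, expected_challenge: bytes, expected_origin: str) -> bool:
    """First steps of WebAuthn assertion verification (NOT the signature check).

    Because the browser records the calling page's origin, an assertion relayed
    from a look-alike phishing domain fails here.
    """
    client_data = json.loads(client_data_json)
    return (
        client_data.get("type") == "webauthn.get"
        and client_data.get("challenge") == b64url(expected_challenge)
        and client_data.get("origin") == expected_origin
    )


challenge = secrets.token_bytes(32)  # issued by the relying party for this login attempt
genuine = json.dumps({"type": "webauthn.get", "challenge": b64url(challenge),
                      "origin": "https://example.com"}).encode()
phished = json.dumps({"type": "webauthn.get", "challenge": b64url(challenge),
                      "origin": "https://examp1e.com"}).encode()
print(check_client_data(genuine, challenge, "https://example.com"))  # True
print(check_client_data(phished, challenge, "https://example.com"))  # False
```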

A lack of OAuth controls and detections is common to both machine and human identity systems, and tokens and identities for SaaS products like GitHub will continue to be stolen or leaked, as with CircleCI, Slack, and Microsoft AI. Non-expiring OAuth tokens may permit indefinite access to applications. Third parties like Cloudflare are able to detect some of these OAuth attacks thanks to their expansive internet presence, but end users need better usage monitoring to detect these attacks effectively. Zero Trust approaches significantly reduce the risk of credential theft or reuse, and SPIFFE usage will continue to grow, as Google Cloud has adopted it for federated identity, and a modified internal version for all machine identity in its own GCP control plane.
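SPIFFE identities are URIs of the form `spiffe://<trust domain>/<workload path>`, and systems that federate them need to parse them strictly. A minimal validator following the main rules of the SPIFFE ID specification (a lowercase trust domain of letters, digits, dots, dashes, and underscores; no port, user info, query, or fragment; non-empty path segments that are not `.` or `..`; no trailing slash) might look like this. It is an illustrative sketch; production code should use a maintained SPIFFE library, and the example workload path is hypothetical.

```python
import re
from typing import Tuple

TRUST_DOMAIN = re.compile(r"[a-z0-9._-]+")
PATH_SEGMENT = re.compile(r"[A-Za-z0-9._-]+")


def parse_spiffe_id(uri: str) -> Tuple[str, str]:
    """Split a SPIFFE ID into (trust_domain, path), raising ValueError if it is malformed."""
    scheme = "spiffe://"
    if not uri.startswith(scheme):
        raise ValueError("SPIFFE IDs must use the lowercase spiffe:// scheme")
    trust_domain, has_path, path = uri[len(scheme):].partition("/")
    # Rejects upper case, ports (':'), user info ('@'), queries ('?') and fragments ('#').
    if not TRUST_DOMAIN.fullmatch(trust_domain):
        raise ValueError(f"invalid trust domain: {trust_domain!r}")
    segments = path.split("/") if has_path else []
    for segment in segments:
        # Empty segments catch '//' and trailing slashes; '.' and '..' are forbidden.
        if segment in (".", "..") or not PATH_SEGMENT.fullmatch(segment):
            raise ValueError(f"invalid path segment: {segment!r}")
    return trust_domain, ("/" + "/".join(segments)) if segments else ""


print(parse_spiffe_id("spiffe://example.org/ns/prod/sa/web"))  # ('example.org', '/ns/prod/sa/web')
```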

More widely, machine authorisation has suffered from credential reuse, and Kubernetes is no exception. Long-lived service account tokens that never expire and are difficult to revoke (the problem bound service account tokens (BSAT) were introduced to address) demonstrate the persistence an attacker may enjoy, and RBAC misconfiguration continues to be a key threat. Kubernetes’ autonomous operator pattern often imbues its workloads with far greater privilege than required, and the ability to root a cluster via the excessive permissions of third-party apps will persist, as applications are shipped with a focus on functionality rather than their potential for malicious escalation.
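An audit for long-lived Kubernetes service account tokens can start by decoding each token’s claims: legacy Secret-based tokens carry no `exp` claim and so never expire, whereas tokens issued through the TokenRequest API are time-bound. The sketch below decodes the payload without verifying the signature (acceptable for an inventory audit, never for authentication); the one-hour policy limit and the sample claims are hypothetical.

```python
import base64
import json
import time
from typing import List, Optional


def b64url_decode(segment: str) -> bytes:
    """Decode unpadded base64url, as used in JWT segments."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def jwt_claims(token: str) -> dict:
    """Decode a JWT payload WITHOUT verifying its signature (audit use only)."""
    return json.loads(b64url_decode(token.split(".")[1]))


def lifetime_findings(token: str, max_lifetime_s: int = 3600, now: Optional[float] = None) -> List[str]:
    """Flag tokens that never expire, outlive the policy limit, or have already expired."""
    claims = jwt_claims(token)
    now = time.time() if now is None else now
    if "exp" not in claims:
        return ["never expires"]
    findings = []
    if "iat" in claims and claims["exp"] - claims["iat"] > max_lifetime_s:
        findings.append("lifetime exceeds policy")
    if claims["exp"] <= now:
        findings.append("expired")
    return findings


def unsigned_token(claims: dict) -> str:
    """Build an unsigned demo token (alg 'none') purely to exercise the audit."""
    def encode(obj: dict) -> str:
        return base64.urlsafe_b64encode(json.dumps(obj).encode()).rstrip(b"=").decode()
    return f"{encode({'alg': 'none'})}.{encode(claims)}."


legacy = unsigned_token({"iss": "kubernetes/serviceaccount", "sub": "system:serviceaccount:default:app"})
bound = unsigned_token({"sub": "system:serviceaccount:default:app", "iat": 1_700_000_000, "exp": 1_700_003_600})
print(lifetime_findings(legacy))                    # ['never expires']
print(lifetime_findings(bound, now=1_700_000_010))  # []
```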

An alternative to standard RBAC authorisation in Kubernetes is kube-rebac-authorizer, which defines and walks relationships in a graph, enforcing them with OpenFGA, an implementation inspired by Google’s Zanzibar project. NIST’s Next Generation Access Control (NGAC) takes a similar graph-based approach: it overlays policy onto an existing representation of a system, and can model RBAC and ABAC whilst offering a high level of granularity, consistent graph resolution time, and auditability. We will see more of these faster, less complex authorisation frameworks as they replace hand-cranked YAML with higher-level DSLs that can be validated and tested.
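The core idea of relationship-based access control can be sketched in a few lines: permissions are stored as relationship tuples, and a check walks the graph through usersets such as the members of a group. This toy model borrows Zanzibar’s tuple notation but is not OpenFGA’s API or kube-rebac-authorizer’s schema; it omits computed relations (such as editors implicitly being viewers), conditions, and consistency guarantees. All object and user names are hypothetical.

```python
from typing import Optional, Set, Tuple

Relationship = Tuple[str, str, str]  # (object, relation, subject)

# A subject is either a user ("user:alice") or a userset
# ("group:sre#member": everyone holding `member` on group:sre).
TUPLES: Set[Relationship] = {
    ("group:sre", "member", "user:alice"),
    ("namespace:prod", "viewer", "group:sre#member"),
    ("namespace:dev", "viewer", "user:bob"),
}


def check(obj: str, relation: str, user: str,
          tuples: Set[Relationship] = TUPLES,
          seen: Optional[Set[Tuple[str, str]]] = None) -> bool:
    """Return True if `user` holds `relation` on `obj`, following usersets through the graph."""
    seen = set() if seen is None else seen
    if (obj, relation) in seen:
        return False  # already explored; also guards against cycles
    seen.add((obj, relation))
    for t_obj, t_rel, subject in tuples:
        if (t_obj, t_rel) != (obj, relation):
            continue
        if subject == user:
            return True
        if "#" in subject:  # userset: recurse into e.g. group:sre#member
            sub_obj, sub_rel = subject.split("#", 1)
            if check(sub_obj, sub_rel, user, tuples, seen):
                return True
    return False


print(check("namespace:prod", "viewer", "user:alice"))  # True, via group:sre membership
print(check("namespace:prod", "viewer", "user:bob"))    # False
```

Compared with enumerating RBAC bindings in YAML, adding Alice to the group is a single new tuple, and the check explains itself as a path through the graph.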

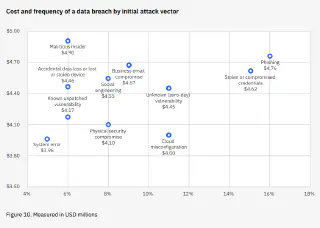

Common Cloud Controls battles misconfiguration, the greatest source of cloud insecurity

Misconfiguration remains a leading cause of cloud insecurity according to IBM’s Cost of a Data Breach Report 2023, coming in behind phishing and stolen or compromised credentials. Misconfiguration arises from the complex and dynamic nature of cloud environments, where simple configuration slips can create gaping security holes.

Source: IBM Security

The focus on automated defences is key to mitigating this persistent challenge, highlighting the importance of continuous and automated cloud control measures, which FINOS (the Fintech Open Source Foundation) provides in its Common Cloud Controls (CCC) project. Proposed by Citi as an open project, CCC brings unified cybersecurity, compliance, and resiliency standards to regulated public cloud deployments. The project was based on an exhaustive threat model of each individual cloud service, the interplay between services, and the controls available from the top of the cloud account down to the individual organisation.
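Continuous, automated control checking boils down to evaluating every resource in an inventory against a set of machine-checkable rules on each run. The sketch below uses invented control IDs and a made-up resource schema; it is not the CCC taxonomy or any cloud provider’s API, only an illustration of the evaluation loop.

```python
from typing import Callable, Dict, List

Resource = Dict[str, object]

# Hypothetical controls: each returns True when the resource complies.
# Missing settings are treated as non-compliant where that is the safer default.
CONTROLS: Dict[str, Callable[[Resource], bool]] = {
    "STORAGE-01 no public buckets": lambda r: r["type"] != "bucket" or not r.get("public", False),
    "STORAGE-02 encryption at rest": lambda r: r["type"] != "bucket" or bool(r.get("encrypted", False)),
    "K8S-01 private control plane": lambda r: r["type"] != "cluster" or not r.get("public_endpoint", True),
}


def evaluate(inventory: List[Resource]) -> List[str]:
    """Return one finding per (resource, failed control) pair."""
    return [
        f"{resource['id']}: {control}"
        for resource in inventory
        for control, passes in CONTROLS.items()
        if not passes(resource)
    ]


# Hypothetical inventory snapshot, e.g. exported from a cloud asset API on a schedule.
INVENTORY: List[Resource] = [
    {"id": "logs", "type": "bucket", "public": True, "encrypted": True},
    {"id": "backups", "type": "bucket", "public": False, "encrypted": False},
    {"id": "prod", "type": "cluster", "public_endpoint": False},
]

for finding in evaluate(INVENTORY):
    print(finding)
```

Running this on every inventory snapshot, rather than at audit time, is what turns a control catalogue into a continuous check.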

Its goal is to develop a unified taxonomy of common cloud services and their associated threats, including their Kubernetes orchestrators, leaning on existing threat definition work from MITRE and associated parties to enable continuous DevSecOps-style security approaches. Unified and automated security programs will continue to grow as they are the greatest preventative and cost-saving measure in the face of data breaches, reducing the financial damage by an average of $250,000.

Source: IBM Security

Current CCC topics include “Analytics, Compute, Containers, Cost and Billing, Databases, Developer Tools, Integration, Machine Learning, Management and Governance, Migration, Monitoring, Network, Security, and Storage”, each cross-referenced against equivalent services in GCP, AWS, and Azure. CCC will look to codify its control testing with the Open Security Controls Assessment Language (OSCAL), NIST’s standardised, machine-readable format (in XML, JSON, and YAML) that enables automated checks to run continuously and validate the security posture of all deployed cloud infrastructure. This dramatic advance in cloud security will help secure large and small cloud deployments alike, and standardise the controls available to us across providers.
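Because OSCAL catalogs are machine-readable, tooling can enumerate and act on controls directly. A minimal sketch that walks an OSCAL JSON catalog, assuming the standard layout in which a `catalog` holds `groups` and `controls`, and control enhancements are nested as further `controls`. The trimmed sample document (which omits required fields such as `uuid` and `metadata`) and its control IDs are hypothetical.

```python
import json
from typing import Iterator, Tuple


def iter_controls(node: dict) -> Iterator[Tuple[str, str]]:
    """Yield (id, title) for every control under an OSCAL catalog node,
    recursing into groups, nested groups, and control enhancements."""
    for group in node.get("groups", []):
        yield from iter_controls(group)
    for control in node.get("controls", []):
        yield control["id"], control["title"]
        yield from iter_controls(control)


# Hypothetical, heavily trimmed catalog for illustration.
CATALOG_JSON = """
{"catalog": {"groups": [{"id": "stor", "title": "Storage", "controls": [
  {"id": "ccc-stor-1", "title": "Block public access", "controls": [
    {"id": "ccc-stor-1.1", "title": "Alert on bucket policy changes"}]}]}]}}
"""
CATALOG = json.loads(CATALOG_JSON)

for control_id, title in iter_controls(CATALOG["catalog"]):
    print(control_id, "-", title)
```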

“Open Source” AI fails in deterministic reproducibility at scale

The debate around Open Source AI and the determinism of AI training remains contentious, with questions around the reproducibility of AI models and the inherent uncertainties in training processes, especially given the complexities and variabilities of massive distributed computing systems.

Source: Nature: Is AI leading to a reproducibility crisis in science?

“Open Source” AI will remain a contested definition. The foundational quandary of whether AI models can ever be deterministic will linger alongside general concerns around legal (as NYT sues Microsoft and OpenAI), licensing (as Meta’s LLaMA2 model is decried by the Open Source Initiative), and intellectual property (the US Copyright Office rescinded copyright for AI-generated works that were insufficiently crafted by humans).

Purely statistical models without stochastic elements may be reproducible, given fully deterministic build and training code, but most models with stochastic elements are not intended to be accurately reproduced in multithreaded, asynchronous, and highly parallelised environments, and often randomise the order of training data input. These models are trained on massively distributed systems, with the natural jitter and non-determinism that entails: from the processor through to I/O and network fabric. This can be averted with single-threading and careful management of random seeds, algorithms, hardware, and inputs, as GPUs are not guaranteed to be deterministic for many parallel vector operations. GPUs are being used beyond their originally conceived use cases and, as the CPU speculative execution problems seen in Spectre and Meltdown showed, there is a price to pay for many optimisations.
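Two of the effects described above can be reproduced in miniature on a CPU. Floating-point addition is not associative, so the order in which parallel workers combine partial sums changes the result; and seeding a dedicated random generator makes data shuffling repeatable. This only illustrates the principle; it is not a full recipe for deterministic GPU training.

```python
import random
from typing import Iterable, List

values = [0.1, 0.2, 0.3]

# The same three numbers, summed in two different orders (as two parallel
# reduction trees might combine them), give different results.
left_first = (values[0] + values[1]) + values[2]
right_first = values[0] + (values[1] + values[2])
print(left_first, right_first, left_first == right_first)  # 0.6000000000000001 0.6 False


def shuffled(data: Iterable[int], seed: int) -> List[int]:
    """Shuffle with a dedicated, explicitly seeded generator so runs are repeatable."""
    rng = random.Random(seed)
    items = list(data)
    rng.shuffle(items)
    return items


print(shuffled(range(10), seed=42) == shuffled(range(10), seed=42))  # True
```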

Even with fully deterministic build and training systems such as Framework Reproducibility (fwr13y), the raw compute cost required to train a model calls into question whether Open Source expectations (that an artefact can be reproduced from its source material) can be met for LLMs and other AI models. Complete details of hardware, source data, software, and training and tuning parameters are rarely released together, and reproducing others’ academic or industrial papers can fail for reasons ranging from errors and omissions in the papers to inconsistent measurements or methodologies.

Hybrid and multi-cloud migration precipitates little change

Limited adoption remains the norm, often driven by specific regulatory or competitive circumstances rather than a general trend. Legislation including the EU’s Digital Operational Resilience Act (DORA) and the Bank of England’s Operational Resilience Guidelines requires organisations to alleviate the systemic risk of cloud concentration, where a widely deployed misconfiguration can impact an organisation’s entire cloud estate, leading to service degradation and business continuity concerns.

There are high costs to redundant, low-utilisation infrastructure, and staffing for multiple deployment targets is difficult due to the intricacies of deploying to each vendor, so outside the organisations required to conform, hybrid cloud approaches will remain few and far between. The Common Cloud Controls project, addressed above, was started in response to these multi-cloud security concerns.

Unless regulation or competition forces change, these strategies are unlikely to see widespread use beyond regulated sectors, except as part of a cloud repatriation strategy in the face of rising cloud costs. In those instances, bursting compute to the cloud for low-bandwidth, low-volume workloads is still a practical way to relieve the load on on-premises datacentres.

Supply chain vulnerabilities intensify as vendors sign SBOMs and VEX documents

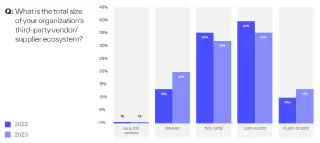

The difference between image scanning and Software Bills of Materials (SBOMs) becomes more pronounced as supply chain vulnerabilities intensify, making up 12% of breaches in 2023 according to BlueVoyant, as organisations continue to expand their supply chains and vendor bases.

Source: The State of Supply Chain Defense: Annual Global Insights Report 2023

SBOMs provide detailed software component lists, whereas image scanning takes the installed software list and analyses it for vulnerabilities within images; 33% of BlueVoyant’s respondents use SBOMs to manage supply chain cyber risk.

Security scanning relies on correct component discovery, and different SBOM formats take different stances on including vulnerability information within SBOM documents. As vulnerabilities constantly evolve and new CVEs are issued, the question of whether an SBOM is point-in-time build evidence or a living representation of the described software’s security state remains unanswered. The existence of the API-driven Open Source Vulnerabilities (OSV) database suggests we should continually revalidate the vulnerabilities listed against the versions of software in SBOMs.
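Continual revalidation can be as simple as re-querying OSV with the package URLs (purls) recorded in an SBOM. A minimal sketch, assuming a CycloneDX JSON SBOM whose `components` carry `purl` fields and OSV’s batch endpoint (`/v1/querybatch`), which returns matching vulnerability IDs for each query. The sample SBOM is hypothetical, and only the payload construction runs without network access.

```python
import json
import urllib.request

OSV_BATCH_URL = "https://api.osv.dev/v1/querybatch"


def osv_batch_payload(cyclonedx_sbom: dict) -> dict:
    """Build an OSV batch query from the purls in a CycloneDX JSON SBOM.

    Components without a purl are skipped; they need another way to be identified.
    """
    queries = [
        {"package": {"purl": component["purl"]}}
        for component in cyclonedx_sbom.get("components", [])
        if "purl" in component
    ]
    return {"queries": queries}


def query_osv(payload: dict) -> dict:
    """POST the batch query to OSV (requires network access; not called below)."""
    request = urllib.request.Request(
        OSV_BATCH_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


# Hypothetical SBOM fragment.
SBOM = {
    "bomFormat": "CycloneDX",
    "components": [
        {"type": "library", "name": "jinja2", "version": "2.4.1", "purl": "pkg:pypi/jinja2@2.4.1"},
        {"type": "library", "name": "in-house-tool"},
    ],
}
print(json.dumps(osv_batch_payload(SBOM)))
```

Scheduling `query_osv(osv_batch_payload(sbom))` against stored SBOMs treats each SBOM as a living document rather than point-in-time evidence.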

The integration of vulnerability registries, attestations, and tools like Sigstore and OpenPubkey cement signing’s place in the security ecosystem, as package managers including Homebrew, npm, cargo, and PyPI move towards Sigstore en masse.

Early movers in the Vulnerability Exploitability eXchange (VEX) space include Kubescape and Kubevuln, Aqua’s Trivy, and Red Hat. More generally, the trust issues around who generated and signed a VEX document carry over from SBOMs, and signed documents shouldn’t replace composition analysis and security monitoring of the software an organisation ingests.
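Applying VEX to scanner output is essentially a filter, and the trust question is who is allowed to influence that filter. The sketch below uses the status values defined by OpenVEX (`not_affected`, `affected`, `fixed`, `under_investigation`) but a simplified, hypothetical statement shape; the `author` field stands in for a signer identity that would, in practice, be established by verifying the document’s signature (for example with Sigstore). The CVE IDs and product are placeholders.

```python
from typing import Dict, Iterable, List, Set, Tuple

# OpenVEX statuses under which a scanner finding can be suppressed.
SUPPRESSING = {"not_affected", "fixed"}


def apply_vex(findings: List[Dict[str, str]],
              statements: Iterable[Dict[str, str]],
              trusted_authors: Set[str]) -> List[Dict[str, str]]:
    """Drop findings that a TRUSTED VEX statement marks as not_affected or fixed."""
    status: Dict[Tuple[str, str], str] = {}
    for statement in statements:
        if statement["author"] not in trusted_authors:
            continue  # ignore VEX from parties we have not chosen to trust
        status[(statement["vulnerability"], statement["product"])] = statement["status"]
    return [
        finding for finding in findings
        if status.get((finding["vulnerability"], finding["product"])) not in SUPPRESSING
    ]


PRODUCT = "pkg:oci/example-app"  # hypothetical product identifier
FINDINGS = [{"vulnerability": f"CVE-0000-000{i}", "product": PRODUCT} for i in (1, 2, 3)]
STATEMENTS = [
    {"author": "vendor", "vulnerability": "CVE-0000-0001", "product": PRODUCT, "status": "not_affected"},
    {"author": "vendor", "vulnerability": "CVE-0000-0002", "product": PRODUCT, "status": "under_investigation"},
    {"author": "unknown", "vulnerability": "CVE-0000-0003", "product": PRODUCT, "status": "not_affected"},
]
TRUSTED = {"vendor"}

for finding in apply_vex(FINDINGS, STATEMENTS, TRUSTED):
    print(finding["vulnerability"])  # CVE-0000-0002, then CVE-0000-0003
```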

Rust ensures longevity with user-facing code in Linux kernel

The adoption of Rust into the Linux kernel again storms ahead, following its inauguration in the Linux 6.1 release, when patches enabling Rust for writing drivers were merged. The first user-visible Rust code may be merged in the Linux 6.8 release, marking a significant milestone in the language’s adoption. Once that code is present, Linux’s extreme backwards compatibility means it would have to be rewritten in another language to be removed. Because that is unlikely, Rust will be able to build on its success to broach a future challenge: a new Rust compiler based on GCC. This isn’t the focus of the core Rust community, and wider consensus on the best way forward is yet to be reached.

gccrs is looking to fill that void, but it is only the next step in a journey that may take another couple of years. Rust will spread throughout the open source ecosystem in line with US Government calls for memory-safe critical infrastructure, which state that memory safety vulnerabilities are “the most prevalent type of disclosed software vulnerability”.

Research shows that roughly 2/3 of software vulnerabilities are due to a lack of ‘memory safe’ coding. Removing this routinely exploited security vulnerability can pay enormous dividends for our nation’s cybersecurity but will require concerted community effort and sustained investment at the executive level.

— Jen Easterly, CISA Director

The Rust ecosystem continues to mature with support from developers and vendors. Its compile-time safety guarantees make it attractive for rewriting critical components, not only of the Linux kernel but also of internet-scale infrastructure under Prossimo (an Internet Security Research Group (ISRG) project working on Rust implementations of TLS, AV1, sudo/su, NTP, Apache, DNS, curl, and other tools) and everyday command line tools. The Rust Foundation will also extend work on a nascent formal specification of the Rust programming language under the Specification Team’s RFC, to make Rust more widely adoptable.

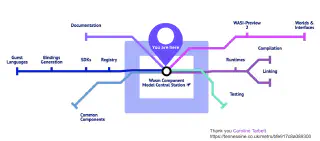

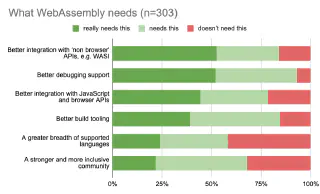

WebAssembly’s component model revolutionises language interoperability

With inherent strengths like hardware neutrality and extreme portability, WebAssembly (WASM) will increasingly move beyond web into server-side applications. Its ecosystem is growing rapidly, with production support flourishing in major browsers and languages (including C/C++, Rust, Go, C#, Lua, COBOL, with many others usable). Its near-native performance makes it an attractive choice for high-performance applications in fields like gaming, video editing, scientific computing, and AI.

Source: The Linux Foundation: Keynote: Are We Componentized Yet?

Component model development is paving the way for broader use of WASM beyond web browsers and servers, enabling rapid cross-language compatibility and modularity, as WASM binaries can treat each other like libraries regardless of their source language. This means WASM can now be used to build a wide range of interoperable applications from existing code compiled to WASM. WASM will be used in everything from web apps to mobile, desktop, edge, and IoT devices, and is poised to upend software deployment as it breaks down the silos of current package ecosystems.

The slow, methodical development of WASI (the WebAssembly System Interface) hindered adoption in 2023, but the standard will verge on completion in 2024. This interface defines a WASM app’s interactions with the outside world, including networking, file I/O, IPC, and the other system-level operations that many non-browser applications depend on. Its secure-by-design sandbox model enables no capabilities by default, so sensitive memory and resources are unavailable to hostile users unless the application explicitly requires them.
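The deny-by-default principle can be illustrated with a sketch of preopened-directory access, in which a module may only touch paths inside directories the host explicitly granted. This is a hypothetical, string-based check for illustration only: WASI runtimes enforce the model with capability handles rather than path comparisons, and a real check would also need to handle symbolic links.

```python
import posixpath
from typing import Iterable


def is_allowed(path: str, preopened_dirs: Iterable[str]) -> bool:
    """Allow access only inside a directory the host explicitly granted (absolute paths only).

    Normalising first neutralises '..' tricks, and comparing whole path
    components stops '/database' from matching a grant of '/data'.
    """
    if not path.startswith("/"):
        return False  # keep the sketch simple: require absolute paths
    target = posixpath.normpath(path)
    for granted in preopened_dirs:
        root = posixpath.normpath(granted)
        if posixpath.commonpath([root, target]) == root:
            return True
    return False  # nothing granted means nothing reachable


GRANTS = ["/data"]  # hypothetical: the host granted only /data
for candidate in ["/data/input.csv", "/data/../etc/passwd", "/database/secret", "/etc/passwd"]:
    print(candidate, is_allowed(candidate, GRANTS))
```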

Source: Scott Logic: The State of WebAssembly 2023

Pending some vital additions that are still underway (including threads, GC, exception handling, and debuggability within the sandbox), the core technology will remain complex, but external tooling and vendor advancements will increasingly offer ease-of-deployment alternatives that will bring new users into the community. A browser-based Adobe Flash runtime can’t do any harm either, or at least not as much as Flash did the first time round.

Confidential Computing enhances private compute on untrusted hardware

Confidential compute workloads isolate trusted computations within Trusted Execution Environments, securing sensitive data in use from hostile software, protocol, cryptographic and most other attacks (excluding invasive physical access). Its adoption within AI and privacy-critical applications is expected to grow, and there is great promise in use of the technology to comply with data sovereignty and locality regulation as the major cloud providers all offer implementations.

As major cloud providers don’t support boot chain verification, privacy must be considered under the shared responsibility model and in light of the user’s trust in the provider. An exciting development in this domain is AWS’s open-sourcing of its UEFI implementation, bringing us one step closer to verifying the measurements and signatures taken during boot.
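Verifying boot measurements rests on the TPM extend operation: each boot stage’s digest is folded into a Platform Configuration Register as new = SHA-256(old || digest), so the final value commits to every measurement and its order. A verifier can replay an event log and compare the result with the value the TPM reports. A minimal sketch, assuming a SHA-256 PCR bank that starts at all zeros; the component names are hypothetical, and verifying the signature on the TPM quote is omitted.

```python
import hashlib
import hmac
from typing import Iterable, List


def extend(pcr: bytes, digest: bytes) -> bytes:
    """TPM 2.0-style extend for a SHA-256 bank: new PCR = SHA-256(old PCR || digest)."""
    return hashlib.sha256(pcr + digest).digest()


def replay(event_digests: Iterable[bytes]) -> bytes:
    """Recompute the expected PCR value from an event log of measurement digests."""
    pcr = bytes(32)  # assumed reset value: 32 zero bytes
    for digest in event_digests:
        pcr = extend(pcr, digest)
    return pcr


def log_matches(event_digests: Iterable[bytes], reported_pcr: bytes) -> bool:
    """Constant-time comparison of the replayed log against the TPM-reported value."""
    return hmac.compare_digest(replay(event_digests), reported_pcr)


def measure(component: bytes) -> bytes:
    return hashlib.sha256(component).digest()


# Hypothetical boot chain; in practice the reported value arrives in a signed TPM quote.
boot_chain: List[bytes] = [measure(b"firmware v1"), measure(b"bootloader v2"), measure(b"kernel v3")]
reported = replay(boot_chain)

tampered = boot_chain[:2] + [measure(b"kernel v3 (modified)")]
reordered = [boot_chain[1], boot_chain[0], boot_chain[2]]
print(log_matches(boot_chain, reported))  # True
print(log_matches(tampered, reported))    # False
print(log_matches(reordered, reported))   # False
```

Open-source, reproducibly built firmware is what lets a verifier compute the expected firmware digest independently, instead of taking it on trust from the provider.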

While Confidential Computing deployments may still be vulnerable to certain hardware attacks, no technology is immune to prolonged physical attack: all systems are vulnerable to a sufficiently resourced and motivated attacker. Hardware constraints may hinder hobbyist and more widespread adoption as most TEE implementations currently exist in server-grade hardware, but other form factors are becoming available (in Arm-based cores and in GPUs, for example).

Source: Wikipedia

The Kubernetes-based Confidential Containers project, powered by Kata Containers, encrypts everything from the TPM to all data in use by using Trusted Execution Environments. It is one example of an open source approach to Confidential Computing (others can be found under the Confidential Computing Consortium), and continues to advance steadily. Its key broker service will potentially integrate with SPIFFE for hardware root-of-trust attestation, and future Confidential Clusters will support Kubernetes clusters in which all nodes run confidential VMs.

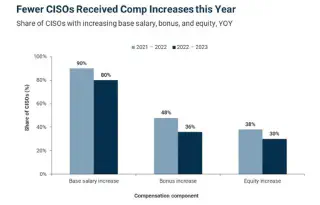

CISOs and employers increasingly penalised and cybersecurity insurance descopes ransomware and negligence

Cybersecurity insurance policies will shift to descope ransomware and negligence amid the maelstrom of escalating ransomware attacks. Industry and regulatory agencies continue to be hit by expanding cyber risks, underscored by incidents such as the $1.4 billion settlement for Merck and the roughly $300 million of financial damage incurred by Danish shipping giant Maersk, both victims of NotPetya, whose global damage is estimated at around $10 billion.

As governments have introduced fines for data breaches, negligence has become less tolerable for insurers: Lloyd’s implemented requirements for cyber insurance policies in March 2023, including clear clauses on state-backed cyberattacks and war exclusions. Insurers can now limit or exclude ransomware coverage based on individual policies, although the Financial Conduct Authority (FCA) is investigating unfair terms and conditions in insurance policies, which could influence or restore ransomware coverage in the future.

Source: IANS Research

The SEC will increase pressure on CISOs to be held accountable for security and cyber incidents, which may carry civil or financial penalties. The SolarWinds CISO of the SUNBURST era and other C-level executives have been issued Wells Notices, which don’t indicate wrongdoing but may lead to enforcement action. This may result in a new or repurposed form of insurance for personal liability, set against the backdrop of decreasing remuneration in a challenging market for security leaders.

Open Source regulations and licensing continue to confound

New Open Source licences and laws will increase the cognitive load on maintainers, as navigating the intricacies of open source regulation may require legal support. The EU’s Cyber Resilience Act (CRA) has been released and, after much Open Source caveating, excludes most open source code. Pressure from groups like the Eclipse Foundation, Electronic Frontier Foundation, Open Source Initiative (OSI), and Linux Foundation has been invaluable in convincing legislators not to include free and open source maintainers.

The CRA applies to all commercial (or data-harvesting) open source projects, which may impact vendors and large organisations more than maintainers. Maintainers that are paid for supporting a project, with their open source gains spent on non-profit objectives, are happily exempt.

The HashiCorp BUSL fallout will continue to feed the OpenTofu project, continuing a line of licence changes at projects including Elasticsearch, Grafana, and Sentry, and has been followed by OpenBao, the fork of Vault, an IBM project born of necessity. The team were consuming Vault and realised they wouldn’t be able to continue without restoring the original licence, necessitating a fork; forking will persist as the most viable option in the case of licence changes.

Whether HashiCorp will fork HashiCorp Configuration Language (HCL) before OpenTofu builds breaking features is perhaps moot, considering the project advanced from fork to GA in four short months and its next version is considering whether to parameterise backends, providers, and modules in HCL, which would effectively hard fork the module repository too (there’s already an R2-backed module repository for the project, separate from HashiCorp’s). Given the clear “source open” stance taken by HashiCorp, it seems unlikely that the community will ensure backwards compatibility in the face of competing module registries with different licences.

Enterprises will likely stay with Vault instead of OpenBao for the professional services support, as Vault is a critical production component depended upon by many systems. OpenTofu may bring an opportunity for heavy users to escape Terraform Cloud’s strange and expensive pricing model — the strength of community growth and the trust in it by enterprise will dictate whether these projects receive institutional backing.

As these pincer assaults from vendors and legislators bring greater restrictions on pure open source, it remains to be seen whether these changes will stifle innovation and collaboration, the hallmarks of the open source movement. The CRA’s impact on commercial entities may dissuade organizations from engaging with open source projects, which could lead to a re-evaluation of how companies contribute to and benefit from open source software.

Many thanks to the reviewers.