Trust Issues: Navigating Open Source and Software Supply Chain Risk

Introduction: The Age of Open Source Dependency

Open source software (OSS) is both the not-so-invisible fabric of modern innovation and a complex cyber attack surface. Over 90% of modern software applications depend on OSS, yet this ecosystem thrives on a community-driven model that was never designed with the rigour of regulated industries or critical infrastructure in mind.

In regulated industries, where trust is non-negotiable and compliance must be embedded in every operational layer, open source can appear opaque to institutions whose operating models are defined by auditability, compliance, and formal risk controls. These industries are increasingly reliant on code they did not write, do not maintain, and, at times, cannot fully inspect. Innovation demands speed; regulation demands accountability.

This tension is not abstract. It manifests in open source ingestion at scale, in CI/CD pipelines and build systems, and in artefact repositories and container images. The result is a growing dissonance between delivery velocity and assurance, one that regulated organisations are now required to address at scale.

This paper, authored by ControlPlane with contributors from Lloyds Banking Group, Innovate UK, and OpenUK, explores this challenge from two perspectives: the strategic and existential (“why”) and the practical and implementation-focused (“how”).

Along the way, we highlight how some enterprises now directly employ OSS core maintainers to ensure the sustainability of critical codebases. We also explore how OpenSSF initiatives (e.g. SLSA, Scorecard) and open source projects (e.g. in-toto, Witness) are shaping the future of software supply chain trust.

Episode 1: “Untrusted Execution: A Risk-Aware Approach to Software Supply Chains in Risk-Averse Organisations”

Authors:

- Francesco Beltramini (ControlPlane)

- John Kjell (ControlPlane)

- Renzo Cherin (Lloyds Banking Group)

The Trust Dilemma

Financial Services Institutions (FSIs) occupy a uniquely exposed position in the Software Supply Chain landscape. Their digital infrastructure qualifies as critical national infrastructure, operates under intense regulatory scrutiny, and is bound by governance models optimised for stability rather than agility.

Yet they seek to depend on dynamic, community-maintained software components, built and shipped through ecosystems over which they have limited visibility or control. They have turned to OSS to accelerate innovation, enable platform agility, and avoid vendor lock-in. Because OSS is often freely available and globally maintained, FSIs can benefit from rapid iteration cycles and shared community knowledge without bearing the full burden of development and maintenance themselves, or paying hefty subscription or licence fees to third-party commercial vendors.

However, the dynamic, decentralised nature of OSS can collide directly with their operating model.

This friction is not confined to package selection. It plays out across the entire software supply chain:

- Developers inherit the consequences of trust decisions they did not make

- Legacy governance practices struggle to cope with transitive dependencies

- CI/CD pipelines become blind spots for audit and risk modelling

- Build systems, often stitched together from scripts, SaaS tools, and bespoke automation, become high-risk zones with low visibility, limited assurance, and weak provenance guarantees

In this context, FSIs must navigate the shift from static, binary approval decisions to a layered, contextual trust model. Trust is not granted once; it must be continuously earned across ingestion, build, and deployment. What must be addressed here is not fundamentally a tooling or process issue. It is a trust issue across the software supply chain.

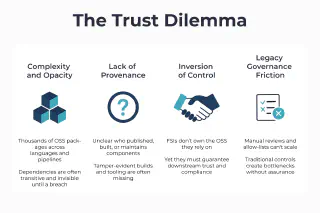

The issue plays out in multiple dimensions:

- Complexity and opacity: Modern FSI systems are built with thousands of OSS packages, often across multiple programming languages, pipelines, and business units. The majority of these dependencies are transitive, invisible until a breach or CVE forces visibility.

- Legacy governance friction: Traditional controls, such as manual reviews, procurement-style security sign-offs, or static approved-lists, simply cannot scale to the speed or granularity needed. They create bottlenecks without guaranteeing meaningful assurance.

- Lack of provenance: FSIs may struggle to answer foundational questions: Who published this dependency? When? How was it built? Is it still maintained? This applies as much to internally built software as to external components. Without tamper-evident build processes and provenance-aware tooling, organisations cannot demonstrate that the software they produce is trustworthy, even if all source code is verified. Whilst these gaps were once treated as operational liabilities, they are gradually becoming regulatory ones under incoming frameworks such as the EU Cyber Resilience Act (CRA).

- Inversion of control: Most critically, FSIs don’t own the components they depend on. OSS is upstream, external, and changeable at any time, yet FSIs are expected to guarantee the downstream security of the systems they ship to customers and report to regulators. Likewise, they often rely on third-party or cloud-hosted CI/CD and build tooling, introducing additional complexity around platform trust boundaries and signing chains. As a result, FSIs are facing a transformation in how they conceptualise software trust. It is no longer sufficient or feasible to approve software artefacts at the edge; institutions must now design systems that earn and assert trust continuously across the entire software delivery lifecycle, including the build process itself.
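Some of the provenance questions above (how was it built, and by what?) can be answered by machine-checkable attestations rather than by manual review. As a minimal sketch, assuming an artefact ships with SLSA v1 provenance wrapped in an in-toto v1 Statement, and assuming a hypothetical allow-list of trusted builder IDs (`TRUSTED_BUILDERS`, with a made-up URL), the Python below checks that the artefact’s SHA-256 digest matches a statement subject and that a trusted builder produced it. It deliberately skips verifying the signature on the surrounding DSSE envelope, which any real verifier must do first.

```python
import hashlib
import json

# Hypothetical allow-list of builder identities; a real deployment would
# load this from governed, version-controlled configuration.
TRUSTED_BUILDERS = {"https://builder.example.internal/ci@v1"}

STATEMENT_TYPE = "https://in-toto.io/Statement/v1"
SLSA_PROVENANCE_V1 = "https://slsa.dev/provenance/v1"


def check_provenance(artefact: bytes, statement_json: str) -> list[str]:
    """Return a list of problems; an empty list means every check passed.

    Assumes the in-toto Statement was already extracted from its DSSE
    envelope AND that the envelope signature was verified elsewhere.
    This function performs no signature verification.
    """
    problems = []
    stmt = json.loads(statement_json)

    if stmt.get("_type") != STATEMENT_TYPE:
        problems.append("not an in-toto v1 Statement")
    if stmt.get("predicateType") != SLSA_PROVENANCE_V1:
        problems.append("predicate is not SLSA provenance v1")

    # The artefact we hold must be one of the subjects the provenance describes.
    digest = hashlib.sha256(artefact).hexdigest()
    subjects = stmt.get("subject", [])
    if not any(s.get("digest", {}).get("sha256") == digest for s in subjects):
        problems.append("artefact digest does not match any subject")

    # It must also have been produced by a builder we have chosen to trust.
    builder_id = (
        stmt.get("predicate", {})
        .get("runDetails", {})
        .get("builder", {})
        .get("id")
    )
    if builder_id not in TRUSTED_BUILDERS:
        problems.append(f"untrusted builder: {builder_id!r}")

    return problems


if __name__ == "__main__":
    blob = b"example artefact bytes"
    statement = json.dumps({
        "_type": STATEMENT_TYPE,
        "subject": [{"name": "example.tar.gz",
                     "digest": {"sha256": hashlib.sha256(blob).hexdigest()}}],
        "predicateType": SLSA_PROVENANCE_V1,
        "predicate": {"runDetails": {"builder": {
            "id": "https://builder.example.internal/ci@v1"}}},
    })
    print(check_provenance(blob, statement))         # []
    print(check_provenance(b"tampered", statement))  # ['artefact digest does not match any subject']
```

Returning a list of reasons rather than a boolean keeps the decision explainable, which matters when the result has to be shown to a developer or an auditor.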

Principles for Rebuilding Trust

To address the FSI trust dilemma, it is no longer feasible to rely solely on traditional software risk management techniques such as vulnerability patching, CVE scanning, or checklist-based approvals, particularly at the scale, velocity, and complexity of today’s software supply chains.

Properly addressing the trust dilemma requires a fundamental rethinking of how trust is established, validated, and sustained across every layer of the software lifecycle, from third-party ingestion and internal development to build pipelines, artefact repositories, deployment infrastructure, and runtime environments.

At its core, this is a shift from approving software artefacts to trusting the systems that produce them. Trust becomes a continuous, enforceable, and automated property of the entire software supply chain rather than a one-time assertion. The following principles guide this evolution:

Trust Is Layered, Not Binary. FSIs cannot rely on the illusion of binary approval (safe/unsafe). Instead, trust must be treated as a layered, evolving posture composed of multiple signals and reinforced at multiple stages:

- Who wrote and maintains this code?

- Where was it built, and using what systems and inputs?

- Was the build process tamper-resistant and auditable?

- What is the blast radius of failure if compromised?

This applies equally to internal builds, external artefacts, and transitive dependencies. Not all components require the same level of scrutiny, but all must be visible, attributable, and subject to policy evaluation across both source and supply chain provenance.
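One way to express “not all components require the same level of scrutiny” is to make the evidence a component must carry depend on the blast radius of the service consuming it. The sketch below assumes two real signals, a SLSA Build level (0 to 3) and an OpenSSF Scorecard aggregate score (0 to 10), plus a hypothetical three-tier service classification; the threshold numbers are illustrative assumptions, not recommendations.

```python
from enum import Enum


class Tier(Enum):
    """Hypothetical service tiers, ordered by blast radius if compromised."""
    CRITICAL = "critical"
    STANDARD = "standard"
    LOW = "low"


# Illustrative thresholds only: stricter evidence for a larger blast radius.
REQUIREMENTS = {
    Tier.CRITICAL: {"min_slsa_build_level": 3, "min_scorecard": 7.0},
    Tier.STANDARD: {"min_slsa_build_level": 2, "min_scorecard": 5.0},
    Tier.LOW: {"min_slsa_build_level": 1, "min_scorecard": 3.0},
}


def acceptable(slsa_build_level: int, scorecard: float, tier: Tier) -> bool:
    """Decide whether a component's trust signals meet the tier's bar."""
    req = REQUIREMENTS[tier]
    return (
        slsa_build_level >= req["min_slsa_build_level"]
        and scorecard >= req["min_scorecard"]
    )


if __name__ == "__main__":
    # The same component can be acceptable for one service and not another.
    print(acceptable(2, 6.1, Tier.STANDARD))  # True
    print(acceptable(2, 6.1, Tier.CRITICAL))  # False
```

The point is not the specific numbers but that the answer is contextual: the decision takes the consuming service as an input instead of stamping the component itself as safe or unsafe.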

Governance Must Be Continuous and Automated. Legacy governance was episodic and manual, and that model does not scale. New models must shift from:

- Static allow-lists → Dynamic trust registries

- Snapshot security reviews → Continuous verification

- Manual approvals → Policy-as-code enforcement

Governance is no longer a step in the process; it becomes an always-on control plane spanning code, build, and deploy. Trust decisions are enforced automatically, embedded directly into CI/CD pipelines, build systems, and deployment workflows.
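The move from snapshot reviews to continuous verification can be illustrated with a small policy-as-code sketch. It assumes a hypothetical, versioned `Policy` (an allowed-licence set plus (name, version) pairs flagged by some advisory feed) and re-evaluates every previously admitted artefact whenever the policy changes, so an artefact approved yesterday can be flagged today without waiting for the next manual review. All package names are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Artefact:
    name: str
    version: str
    licence: str


@dataclass(frozen=True)
class Policy:
    version: str                           # policies are versioned like code
    allowed_licences: frozenset[str]
    flagged: frozenset[tuple[str, str]]    # (name, version) pairs from an advisory feed


def evaluate(artefact: Artefact, policy: Policy) -> list[str]:
    """Return the reasons an artefact violates the policy (empty = compliant)."""
    reasons = []
    if artefact.licence not in policy.allowed_licences:
        reasons.append(f"licence {artefact.licence} not allowed")
    if (artefact.name, artefact.version) in policy.flagged:
        reasons.append("version flagged by advisory feed")
    return reasons


def reverify(inventory: list[Artefact], policy: Policy) -> dict[str, list[str]]:
    """Re-run the current policy over every artefact already admitted."""
    findings = {}
    for a in inventory:
        reasons = evaluate(a, policy)
        if reasons:
            findings[f"{a.name}@{a.version}"] = reasons
    return findings


if __name__ == "__main__":
    inventory = [
        Artefact("example-foo", "1.2.0", "MIT"),
        Artefact("example-bar", "0.9.1", "Apache-2.0"),
    ]
    licences = frozenset({"MIT", "Apache-2.0"})
    v1 = Policy("v1", licences, frozenset())
    v2 = Policy("v2", licences, frozenset({("example-bar", "0.9.1")}))
    print(reverify(inventory, v1))  # {}
    print(reverify(inventory, v2))  # {'example-bar@0.9.1': ['version flagged by advisory feed']}
```

In a pipeline, `reverify` would run on every policy change and on a schedule, with findings routed to the owning team rather than to a central review queue.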

Developers Are the First Line of Security. Security reviews that happen after development are too late and too brittle. Developers must be empowered, not policed, to make secure choices. That means:

- Providing developers with secure software repositories that continuously verify the packages they serve

- Embedding guardrails into developer tools, not tickets

- Providing secure-by-default templates, not blanket restrictions

- Aligning DevEx with security rather than positioning it in opposition

Crucially, this includes supporting developers through secure-by-design and secure-by-default practices, enabling them to reason about build provenance, dependency health, and policy compliance from within their workflow rather than via post-hoc escalation. The developer experience can itself be a critical security control surface.

Platform Engineering Is the Trust Multiplier. Golden paths, internal developer platforms (IDPs), and reusable CI/CD templates allow security to scale without blocking delivery. Platform teams act as enablers of trust, abstracting complexity while embedding policy compliance deep into the infrastructure. In practice:

- Build integrity is enforced through hardened pipelines and verifiable artefact generation

- Deployment artefacts are signed, traceable, and governed through trusted registries

- CI/CD templates are version-controlled and security-reviewed like product code

- Workloads are isolated, pipeline steps are ephemeral, and every build step is attested and verifiable

Security shifts left, but also moves down into the delivery fabric, becoming part of the platform itself.
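The idea that every build step is attested and verifiable can be made concrete by chaining step records: each step declares the digests of what it consumed (materials) and what it produced (products), and a verifier checks that each step’s inputs are exactly what an earlier step emitted. This sketch is loosely modelled on in-toto link metadata but is heavily simplified: records are unsigned, there is no layout or key check, and `StepRecord` and `verify_chain` are hypothetical names.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class StepRecord:
    """Simplified, unsigned record of one pipeline step."""
    name: str
    materials: dict[str, str]  # path -> sha256 of each input consumed
    products: dict[str, str]   # path -> sha256 of each output produced


def sha256(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def verify_chain(steps: list[StepRecord]) -> list[str]:
    """Return mismatches; an empty list means the chain is consistent.

    The first step's materials are treated as trusted source inputs; every
    later material must equal a product recorded by an earlier step.
    """
    errors = []
    produced: dict[str, str] = {}
    for i, step in enumerate(steps):
        if i > 0:
            for path, digest in step.materials.items():
                if produced.get(path) != digest:
                    errors.append(
                        f"{step.name}: material {path} does not match an earlier product"
                    )
        produced.update(step.products)
    return errors


if __name__ == "__main__":
    build = StepRecord("build", {"main.c": sha256(b"src")}, {"app": sha256(b"bin")})
    package = StepRecord("package", {"app": sha256(b"bin")}, {"app.tar": sha256(b"tar")})
    print(verify_chain([build, package]))  # []

    # Simulate the binary being swapped between the build and package steps.
    swapped = StepRecord("package", {"app": sha256(b"evil")}, {"app.tar": sha256(b"tar")})
    print(verify_chain([build, swapped]))  # ['package: material app does not match an earlier product']
```

Because each link only has to agree with the one before it, a substitution anywhere between steps shows up as a mismatch, even if every individual step looked healthy in isolation.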

Together, these principles form the foundation for a resilient, scalable software supply chain in highly regulated environments. They do not eliminate risk, but they make it visible, contextual, and progressively manageable through design, automation, and observability.

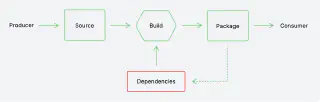

Rethinking the Ingestion Pipeline

The journey from ad hoc software governance to systemic, enforceable trust is already underway across forward-leaning FSIs. But it demands more than updated checklists or better dashboards. It requires a foundational re-architecture of how open source, third-party components, and even internal libraries are ingested, verified, and governed within the software delivery supply chain.

At the centre of this rethinking is a recognition that visibility is not enough. Knowing what is in the software is a start, but it is not sufficient. What’s needed is the ability to define, verify, and continuously enforce what is allowed, how it is built, and who gets to ship it, across all teams, all environments, and all software paths.

Much of the current industry focus is on “visibility” (dashboards, CVE feeds, SBOM exports), but visibility without verifiability leaves critical questions unanswered. Real trust isn’t about seeing everything; it’s about knowing what matters and having the institutional capability to act on it. This means developing systems where:

- Trust signals are embedded, not overlaid

- Risk is understood in context, not in isolation

- Assurance is earned by process, not assumed by default

What’s needed is a shift from approving individual components to trusting the systems that ingest and validate them. The ingestion pipeline must become a strategic control point that goes beyond recording what is used to enforcing how it is selected, verified, and introduced.

Critically, none of this works if ingestion becomes a red-tape choke point. To scale, ingestion policy must be centralised, but its enforcement must be distributed and automated.

That means:

- Defining organisation-wide ingestion policy (e.g. what licence types, maintainers, sources, and maturity levels are acceptable)

- Embedding those policies into shared registries, curated mirrors, or air-gapped artefact gateways

- Automating admission control into CI/CD pipelines, internal registries, and even developer tooling

- Providing teams with tooling that surfaces decisions early, explains rejections, and offers secure-by-default alternatives

This creates a unified system of control, one in which developers are enabled, not blocked, and security is enforced through infrastructure, not human review queues. In this model, ingestion becomes:

- Observable: All artefacts are logged, versioned, and traced to source

- Governed: Policies are defined in code, versioned, and enforced continuously

- Verifiable: All ingested artefacts include attestations tied to build provenance and upstream metadata

- Composable: Risk posture and trust decisions are tailored to service-level criticality
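An ingestion check that is governed by versioned policy, explains its rejections, and offers a secure-by-default alternative might look something like the sketch below. Everything in it is hypothetical: the policy values, the internal mirror URL, the package names, and the use of package age as a crude maturity proxy are assumptions for illustration, not recommended settings.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical organisation-wide ingestion policy, kept in version control.
INGESTION_POLICY = {
    "allowed_licences": {"MIT", "Apache-2.0", "BSD-3-Clause"},
    "allowed_sources": {"https://mirror.example.internal/pypi"},
    "min_maintainers": 2,
    "min_age_days": 30,  # crude maturity proxy; an assumption
}

# Hypothetical curated alternatives suggested when a request is rejected.
ALTERNATIVES = {"example-leftpad": "example-textutils"}


@dataclass
class Request:
    name: str
    licence: str
    source: str
    maintainers: int
    age_days: int


@dataclass
class Decision:
    admitted: bool
    reasons: list[str] = field(default_factory=list)
    suggestion: Optional[str] = None


def admit(req: Request, policy: dict = INGESTION_POLICY) -> Decision:
    """Evaluate one ingestion request and explain any rejection."""
    reasons = []
    if req.licence not in policy["allowed_licences"]:
        reasons.append(f"licence {req.licence!r} is not on the approved list")
    if req.source not in policy["allowed_sources"]:
        reasons.append(f"source {req.source!r} is not a curated mirror")
    if req.maintainers < policy["min_maintainers"]:
        reasons.append(
            f"{req.maintainers} maintainer(s); policy requires {policy['min_maintainers']}"
        )
    if req.age_days < policy["min_age_days"]:
        reasons.append(
            f"published {req.age_days} day(s) ago; policy requires {policy['min_age_days']}"
        )
    if reasons:
        return Decision(False, reasons, ALTERNATIVES.get(req.name))
    return Decision(True)


if __name__ == "__main__":
    req = Request("example-leftpad", "UNKNOWN", "https://registry.example.org", 1, 3)
    decision = admit(req)
    print(decision.admitted)  # False
    for reason in decision.reasons:
        print(" -", reason)
    print("try instead:", decision.suggestion)  # try instead: example-textutils
```

Keeping the policy as data, separate from the evaluation logic, is what lets one central definition be enforced in many places at once: a curated mirror, a CI step, or a developer’s local tooling.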

In an ocean of dynamic dependencies and transitive risk, what gets ingested defines the perimeter. That perimeter is no longer at the edge of the network, but at the front door of the software supply chain and the build system. Rethinking the ingestion pipeline enables FSIs to:

- Gain a scalable point of control over an otherwise sprawling software graph

- Improve situational awareness of what is entering the organisation, enabling downstream decisions around build integrity, artefact signing, and runtime risk

- Lay a foundation for a cohesive trust chain across the software lifecycle, where ingestion, build, and deployment are continuously verifiable and governed by policy

Lloyds Banking Group’s Perspective

Renzo Cherin, Head of Software Engineering Centre of Excellence

As one of the UK’s largest regulated financial institutions, Lloyds Banking Group now sits at the intersection of innovation and assurance. Our digital platform supports millions of customers and billions of secure transactions, and software supply chain integrity is increasingly emerging as a core business requirement for sustaining that trust.

We have entered the early stages of a focused, long-term transformation, and we are actively exploring how secure open source ingestion, policy-driven governance, and platform-aligned controls can form the foundation of a scalable trust architecture. Our efforts go beyond tooling; they reflect a broader ambition to embed trust and security into how we build, deliver, and evolve digital services, without compromising on developer experience, speed, or autonomy.

While regulatory compliance remains a key driver, this is fundamentally an engineering, cultural, and architectural shift, including:

- Consolidating fragmented tooling, programming languages and governance models

- Aligning platform and development teams under shared trust objectives

- Designing systems where assurance is continuous, contextual, and developer-friendly

In the era of software-defined business and emerging AI technologies, speed and security can no longer be trade-offs. They are interdependent. Trust is not what slows delivery down; it’s what enables us to scale it responsibly.

The modernisation work now underway is laying the groundwork for a delivery model that is not only efficient, but increasingly verifiable, resilient, and confidence-driven by design.

Stay tuned for Episode 2, where we turn to the UK public sector, exploring how secure OSS ingestion is being planned and architected in line with the Cabinet Office’s Secure-by-Design mandate.
